This article explores the concept of memory pollution in Large Language Models (LLMs), the importance of memory in these models, and the potential risks associated with polluted memory.

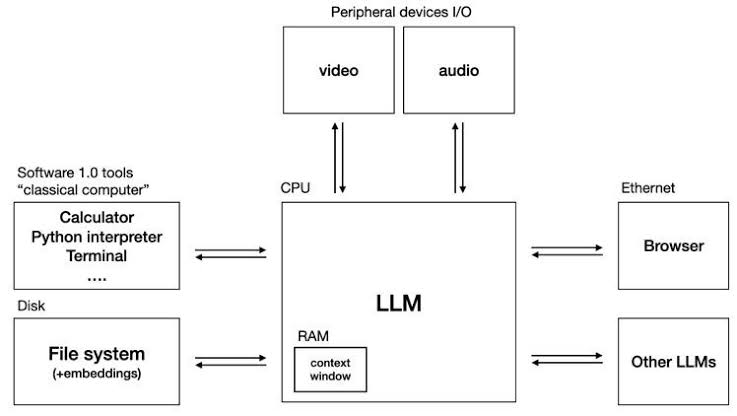

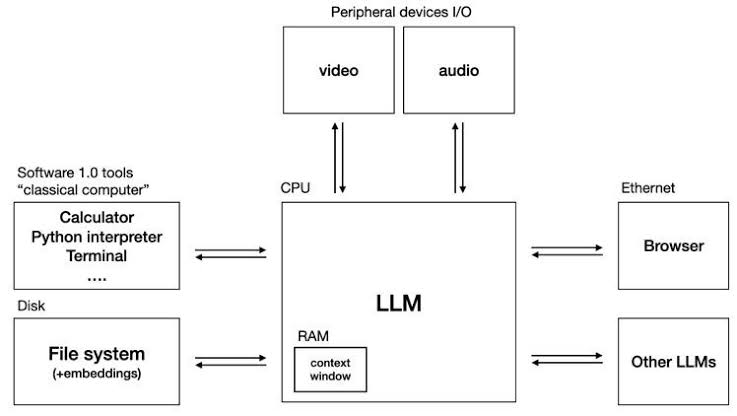

Memory is a cornerstone of both human cognition and LLM functionality. LLM-based systems rely on memory to generate coherent, human-like text: the context window acts as short-term memory, and external databases serve as long-term memory. This lets an LLM remember user preferences, which makes its responses more personalized and relevant. Memory is also one of the many components needed to build Karpathy's LLM operating system (LLM OS), an idea that also inspired me.

LLM OS includes several capabilities:

Short-term memory in LLMs, often referred to as the context window, functions similarly to computer RAM. It temporarily holds information during an interaction, enabling the model to maintain context and coherence in responses.
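To make the RAM analogy concrete, here is a minimal sketch of a bounded short-term memory. The `ShortTermMemory` class and its `max_turns` parameter are hypothetical names chosen for illustration. Real context windows are limited by tokens rather than turns, so this only approximates the behavior.

```python
from collections import deque


class ShortTermMemory:
    """Hypothetical context window that keeps only the most recent turns."""

    def __init__(self, max_turns: int = 4):
        # A deque with maxlen drops the oldest item once it is full,
        # much like old context falling out of the window.
        self.turns = deque(maxlen=max_turns)

    def add(self, role: str, text: str) -> None:
        self.turns.append((role, text))

    def as_prompt(self) -> str:
        # Join the remaining turns into the text the model would see.
        return "\n".join(f"{role}: {text}" for role, text in self.turns)


window = ShortTermMemory(max_turns=2)
window.add("User", "Who is Albert Einstein?")
window.add("Bot", "A theoretical physicist.")
window.add("User", "What did he develop?")
prompt = window.as_prompt()
```

With `max_turns=2`, the first question has already been pushed out by the time the third turn arrives, which is why anything that must survive longer needs long-term memory.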

Long-term memory in LLMs relies on more durable storage. Vector databases, graph databases, relational databases, and plain files and folders can all serve this role. It is comparable to external disks and cloud storage: it retains information across sessions, allowing the model to recall previous interactions and user preferences.
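As a rough illustration of retaining information across sessions, the sketch below uses Python's built-in `sqlite3` module as the relational store. The table layout and the `open_memory`, `remember`, and `recall` helpers are assumptions made for this example, not part of any specific LLM framework.

```python
import os
import sqlite3
import tempfile


def open_memory(path):
    """Open (or create) the hypothetical long-term memory database."""
    conn = sqlite3.connect(path)
    conn.execute("CREATE TABLE IF NOT EXISTS memory (user_id TEXT, fact TEXT)")
    return conn


def remember(conn, user_id, fact):
    conn.execute("INSERT INTO memory (user_id, fact) VALUES (?, ?)", (user_id, fact))
    conn.commit()


def recall(conn, user_id):
    rows = conn.execute(
        "SELECT fact FROM memory WHERE user_id = ? ORDER BY rowid", (user_id,)
    )
    return [fact for (fact,) in rows]


db_path = os.path.join(tempfile.mkdtemp(), "memory.db")

# First session: store a user preference, then close the connection.
session_one = open_memory(db_path)
remember(session_one, "alice", "Prefers short answers")
session_one.close()

# Second session: the preference is still there.
session_two = open_memory(db_path)
recalled = recall(session_two, "alice")
session_two.close()
```

A vector or graph database would replace the SQL queries with similarity or relationship lookups, but the key property is the same: what is written in one session is read back in another.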

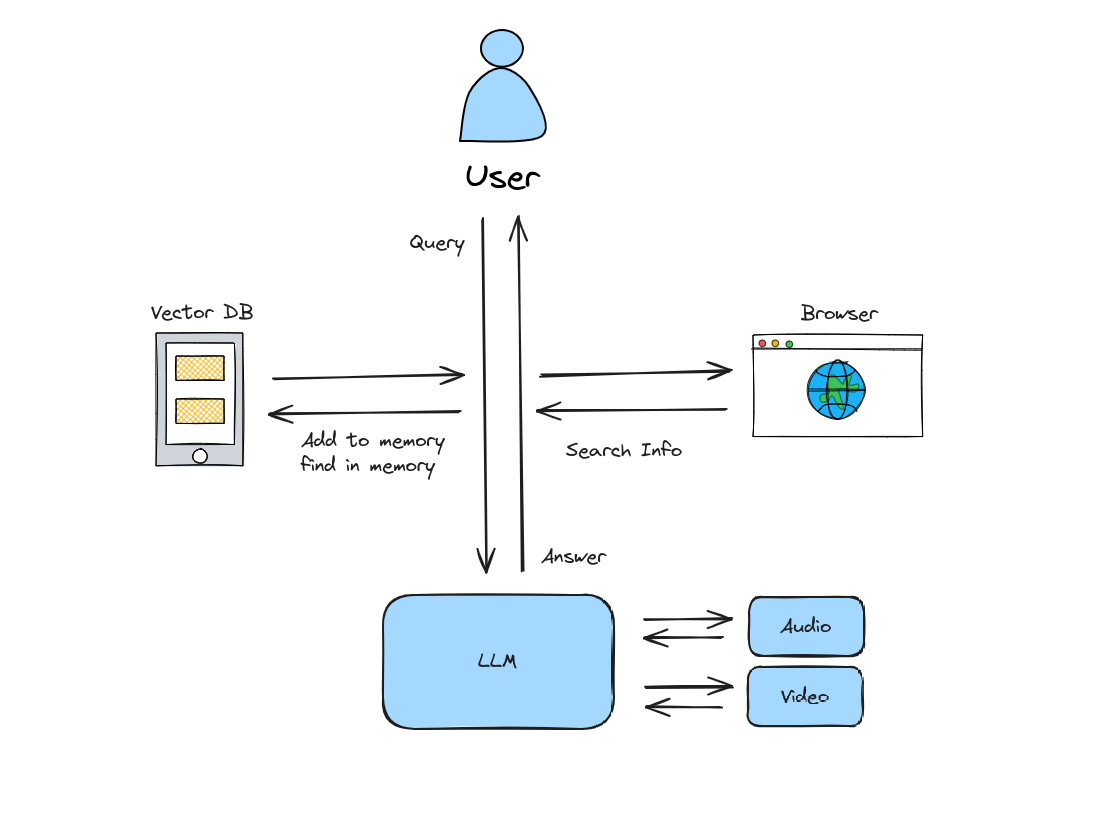

Memory pollution can significantly undermine the reliability of LLMs. It can occur through:

Once the memory is polluted, it can mislead users by inserting fake or biased information into responses. This can be particularly dangerous because users may not immediately recognize the corruption.

Two scenarios, based on the diagram below, illustrate the real-world implications:

User: Who is Albert Einstein?

Bot: Albert Einstein was a theoretical physicist who developed the theory of relativity. This information has been saved to long-term memory.

Saving automatically makes the interaction easier, but it risks recording fake or biased information without the user's consent.
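The risk in this first scenario can be sketched in a few lines. Everything here is hypothetical: `long_term_memory` is a plain list standing in for a real store, and `faulty_model` is a stub that deliberately returns a wrong answer to play the role of a polluted response.

```python
long_term_memory = []  # stand-in for a VectorDB, GraphDB, or other store


def answer_and_autosave(question, generate):
    """Scenario 1: every answer is written to memory with no user check."""
    answer = generate(question)
    long_term_memory.append(answer)
    return answer


def build_prompt(question):
    """Later prompts include everything in memory, whether true or not."""
    facts = "\n".join(f"- {fact}" for fact in long_term_memory)
    return f"Known facts:\n{facts}\n\nUser: {question}"


def faulty_model(question):
    # Hypothetical model output containing false information.
    return "Albert Einstein was a chemist who discovered penicillin."


answer_and_autosave("Who is Albert Einstein?", faulty_model)
later_prompt = build_prompt("What is Einstein known for?")
```

Because nothing checks the answer before it is stored, the false statement ends up in `later_prompt` and can shape every response that follows.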

User: Who is Albert Einstein?

Bot: Albert Einstein was a theoretical physicist who developed the theory of relativity. Would you like to save this information to your long-term memory?

User: Yes, please save it.

Bot: Done! The information has been saved to long-term memory.

Here, the bot asks for the user's approval before saving, which gives the user more control at the cost of an extra step. It also makes moderation easier, because every write to memory passes through an explicit decision.
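A minimal sketch of this approval step might look like the following. The `ask_user` callback is an assumption: in a real chat interface it would show the question and wait for a reply, while here it is replaced by lambdas that answer yes or no.

```python
def answer_with_approval(question, generate, ask_user, memory):
    """Scenario 2: save the answer only if the user explicitly agrees."""
    answer = generate(question)
    saved = ask_user(
        "Would you like to save this information to your long-term memory?\n"
        + answer
    )
    if saved:
        memory.append(answer)
    return answer, saved


def model(question):
    # Hypothetical model stub returning the answer from the dialogue above.
    return (
        "Albert Einstein was a theoretical physicist "
        "who developed the theory of relativity."
    )


# The user approves: the answer is stored.
approved_memory = []
_, saved_yes = answer_with_approval(
    "Who is Albert Einstein?", model, lambda prompt: True, approved_memory
)

# The user declines: memory stays empty.
declined_memory = []
_, saved_no = answer_with_approval(
    "Who is Albert Einstein?", model, lambda prompt: False, declined_memory
)
```

The same callback is a natural place to add moderation, for example by logging each request or routing it to a reviewer before anything is written.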

2024-05-22 - v1.0

2024-05-24 - v1.1